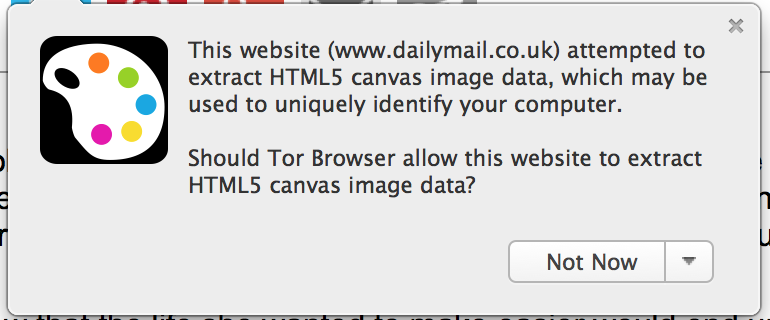

Tor browser’s canvas fingerprinting warning.

Image: Tor Browser

Question from the NIST group

I had a question recently from members of a NIST technical working group to which I belong, asking me to help explain “canvas fingerprinting.” This privacy-invading online technique received press attention in late July, when researchers revealed the extent to which your browser’s text formatting and other settings can invisibly reveal your identity and activities online. The evil spawn of “browser fingerprinting,” a set of online privacy attacks first widely reported in 2010, “canvas fingerprinting” uses seemingly harmless information from your browser to create a kind of online DNA that identifies individuals with statistical certainty.

I was curious about how canvas fingerprinting related in general to “browser fingerprinting,” cookie respawning, evercookies, cookie synching, and other hidden privacy attacks, and how to defend against them. Rebecca Herold, a privacy expert who leads our NIST working group, invited me to review the topic with the group at an upcoming meeting.

When I got to the attack defenses, I found the answer, an old friend: Tor browser. I was not surprised, yet...surprised. Not surprised, yes, as millions of users all over the world know that, when privacy is the problem, Tor is the answer. Surprised, yes, as I had not been aware of how extensively this research and paper referenced Tor, nor how significantly Tor had stood out in its browser experiments. My own research sometimes involves Tor, and I am a member of the “Tor community,” so I expect to be (sort of) on top of “significant” Tor research.

I don’t focus as much on the browser as on Tor’s anonymity-protecting “onion routing.” Tor includes a software browser, as well as other online tools, but it is mainly thought of as a network of thousands of relay servers all over the world, combined with a cryptographic technique that wraps your network communications in layers of encryption. In reading recent press to find how I had missed this one, I realized: I was not alone. Tor’s browser strengths went unreported in virtually all press about canvas fingerprinting, despite the researchers’ startling finding that Tor Browser, alone among dozens of defenses against the widespread attack, is “the only software that we found to successfully protect against canvas fingerprinting.”

Image: NIST

Attack and Defense

“Canvas fingerprinting,” “browser fingerprinting,” respawning, evercookies, cookie synching. As quickly as researchers discover them, new ones appear. Advertisers and marketers have created a hidden world, abetted by invisible technology, that spies and reports on you in order to sell you, presumably as a prospective buyer. Another hidden world of government surveillance grabs from the same toolbox, we learned from the Snowden revelations. Researchers and computer developers at the Cambridge, MA-based Tor Project have devoted their talents and time specifically to protecting privacy online. Tor is cited in the canvas fingerprinting research reported July 22, 2014, for producing tools forming some of the only lines of defense, among dozens of browser and defense options investigated. Acar et al. concluded, “As for more traditional fingerprinting techniques, the Tor browser again appears to be the only effective tool. With the exception of a recent Mozilla effort to limit plugin enumeration, browser manufacturers have not attempted to build in defenses against fingerprinting.”

Because it is seen as the incidental delivery mechanism for Tor’s anonymity-protecting onion routing network, Tor browser itself is less widely considered. So I dusted off and cracked open the Tor browser specification document to see what I had been missing.

I also read all the original research papers. Press reports are nice, but you never know how far to trust their accuracy and completeness. Here is what I found out overall about canvas fingerprinting:

Who’s doing it? Who figured it out? When did they find it, and when was it reported?

Six researchers from KU Leuven in Belgium and Princeton University in the US teamed up to locate canvas fingerprinting “in the wild,” in actual websites. Researchers included Gunes Acar, Christian Eubank, Steven Englehardt, Marc Juarez, Arvind Narayanan, and Claudia Diaz. They wrote a paper, The Web never forgets: Persistent tracking mechanisms in the wild, that technically has yet to be “published” (it is “forthcoming” in the Proceedings of CCS 2014, November 2014). Yet it’s already published on the web, the latest version dated August 10, 2014. Confusing? Yes.

They built on the work first presented in 2012, as theory, in a research paper by Keaton Mowery and Hovav Shacham. Researchers flushed out adware company AddThis’s computer code, along with others, on 5 percent of the top 100,000 websites, or 5,000 websites.

How does canvas fingerprinting work?

It turns out that our browsers each use a more unique set of fonts and text settings than we realize. When a user visits a website with canvas fingerprinting, their browser is instructed to “draw” a hidden sample line of text or 3D graphic, which is then converted to a digital token (hashed). The technique exploits the HTML5 canvas element, also known as the Canvas API. What gets captured includes the set of fonts and the settings a user uses to format text and images in the browser. The Tor browser specification actually sums it up best:

“The adversary simply renders WebGL, font, and named color data to a Canvas element, extracts the image buffer, and computes a hash of that image data. Subtle differences in the video card, font packs, and even font and graphics library versions allow the adversary to produce a stable, simple, high-entropy fingerprint of a computer. In fact, the hash of the rendered image can be used almost identically to a tracking cookie by the web server.”
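The three steps in that description (render, extract the image buffer, hash) can be sketched in a few lines. This is only a simulation written in Python, not browser code: `render_text_pixels` and its `antialias_bias` parameter are hypothetical stand-ins for a real canvas draw and for the small per-machine rendering differences the specification mentions, and the sample text is arbitrary.

```python
import hashlib


def render_text_pixels(text: str, antialias_bias: int) -> bytes:
    """Stand-in for a browser drawing `text` to a hidden canvas.

    `antialias_bias` is a made-up knob imitating the tiny differences that
    fonts, video cards, and graphics libraries introduce on each machine.
    """
    pixels = bytearray()
    for i, ch in enumerate(text):
        # One fake grayscale "pixel" per character, nudged by the machine's bias.
        pixels.append((ord(ch) * 7 + i * antialias_bias) % 256)
    return bytes(pixels)


def canvas_fingerprint(pixels: bytes) -> str:
    """Hash the extracted image buffer into a short, cookie-like token."""
    return hashlib.sha256(pixels).hexdigest()[:16]


SAMPLE = "Sample text 123"

# The same machine renders the same pixels every time, so the token is stable.
machine_a = canvas_fingerprint(render_text_pixels(SAMPLE, antialias_bias=3))
machine_a_again = canvas_fingerprint(render_text_pixels(SAMPLE, antialias_bias=3))

# A machine that renders even slightly differently gets a different token.
machine_b = canvas_fingerprint(render_text_pixels(SAMPLE, antialias_bias=4))
```

No cookie is stored anywhere in this sketch. The token is recomputed on every visit, which is why deleting cookies does not remove it.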

There are so many online privacy attacks, it’s hard to keep them all straight. When did these attacks start? When did we know about them?

Canvas fingerprinting is a kind of privacy attack known as “browser fingerprinting.” The “browser fingerprinting” attack was made (somewhat) famous in 2010 by the Panopticlick project of Peter Eckersley, a Senior Staff Technologist at the Electronic Frontier Foundation (EFF). He presented his findings in How Unique is your Browser? at Defcon and published them in How Unique is your Web Browser? at the Privacy Enhancing Technologies Symposium in 2010. Eckersley showed that seemingly harmless browser settings and information identify us more uniquely than we realize.

Keaton Mowery and Hovav Shacham identified canvas fingerprinting, in theory, in their 2012 paper. Then, early in 2014, Princeton and KU Leuven researchers experimented and found an example “in the wild.” The actual research paper will not even be published until November 2014, in the academic journal of the ACM Conference on Computer and Communications Security (CCS). On July 22, 2014, ProPublica’s blog reported on it, the day after acceptance notifications went out for the ACM CCS publication. Acar also published an article in ieee.org magazine on the same day.

What are these seemingly harmless pieces of information that reveal us?

I compiled this list from Eckersley’s Defcon presentation and the research papers:

Eckersley provided this helpful guide to show which data is most revealing:

Variable Entropy- a measure of how much of a giveaway each is about your identity:
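Entropy in this sense is usually counted in bits: the rarer a value is among all browsers, the more bits it gives away. As a rough illustration only, here is a small Python sketch of that arithmetic. The shares below are invented for the example and are not Eckersley’s measurements, and adding bits across settings assumes the settings vary independently, which real browser settings only approximately do.

```python
import math


def surprisal_bits(share_of_browsers: float) -> float:
    """Bits of identifying information revealed by one observed value."""
    return -math.log2(share_of_browsers)


def combined_bits(shares) -> float:
    """Total bits, assuming the settings vary independently of each other."""
    return sum(surprisal_bits(s) for s in shares)


def expected_lookalikes(population: int, bits: float) -> float:
    """Roughly how many browsers in `population` share the same values."""
    return population / (2 ** bits)


# Made-up shares: a font list seen in 1 of 1,024 browsers,
# and a time zone seen in 1 of 16 browsers.
font_bits = surprisal_bits(1 / 1024)
total_bits = combined_bits([1 / 1024, 1 / 16])
lookalikes = expected_lookalikes(1_000_000, total_bits)
```

With these invented numbers the font list alone is worth 10 bits, the time zone adds 4 more, and 14 bits leaves only about 61 look-alikes among a million browsers.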

Are there related attacks?

In 2009, a study by Soltani et al. showed the abuse of Flash cookies for regenerating previously removed HTTP cookies, a technique referred to as “respawning.” In 2010, Samy Kamkar demonstrated the “Evercookie,” a resilient tracking mechanism that utilizes multiple storage vectors including Flash cookies, localStorage, sessionStorage and ETags. Cookie syncing, a workaround to the Same-Origin Policy, allows different trackers to share user identifiers with each other. Besides being hard to detect, cookie syncing enables back-end server-to-server data merges hidden from public view. The 2014 Persistent paper significantly extends what was known about each in terms of extent and methodology.
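The respawning idea is simple enough to show with a toy model. The Python sketch below is an assumption-laden illustration, not Kamkar’s code or any tracker’s: the browser is reduced to a dictionary of storage vectors, and `respawn` is a hypothetical name for the step a tracking script would run on each visit.

```python
from typing import Dict, Optional

# Storage vectors named in the text, modeled as simple slots.
STORES = ("http_cookie", "flash_cookie", "localStorage", "sessionStorage", "etag")


def respawn(storage: Dict[str, Optional[str]]) -> Optional[str]:
    """Copy any surviving identifier back into every storage vector."""
    survivor = next((v for v in storage.values() if v is not None), None)
    if survivor is not None:
        for name in storage:
            storage[name] = survivor
    return survivor


# The tracker has written the same identifier everywhere it can.
browser: Dict[str, Optional[str]] = {name: "user-1234" for name in STORES}

# The user deletes ordinary cookies, but the other copies survive.
browser["http_cookie"] = None
id_after_partial_clear = respawn(browser)
cookie_after_partial_clear = browser["http_cookie"]

# Only clearing every vector at once leaves nothing to respawn from.
for name in browser:
    browser[name] = None
id_after_full_clear = respawn(browser)
```

After the partial clear the HTTP cookie comes straight back from the surviving copies. After the full clear there is no copy left to restore from.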

The Defense

Eckersley noted in 2010 that Tor topped a very short list of defenses against browser fingerprinting:

“Which browsers did well?

Paradox: some “privacy enhancing” technologies are fingerprintable

Flash blockers

Some forged User Agents

“Privoxy” or “Browzar”

Noteworthy exceptions:

NoScript

TorButton [predecessor to Tor browser]”

Researchers Acar et al. likewise offered users a short list of defenses in July 2014, stating, “Tor Browser [was] the only software that we found to successfully protect against canvas fingerprinting. Specifically, the Tor Browser returns an empty image from all the canvas functions that can be used to read image data. The user is then shown a dialog where she may permit trusted sites to access the canvas. We confirmed the validity of this approach when visiting a site we built which performs browser fingerprinting.”
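The behavior described in that quote can be modeled roughly as follows. This is a Python sketch, not Tor Browser’s actual code: `read_canvas`, the `trusted` set, the example site names, and the all-zero placeholder image are assumptions made for illustration.

```python
import hashlib


def read_canvas(pixels: bytes, site: str, trusted_sites: set) -> bytes:
    """Return real pixels only to sites the user has explicitly permitted."""
    if site in trusted_sites:
        return pixels
    # Everyone else gets a blank image of the same size (all zero bytes).
    return bytes(len(pixels))


def fingerprint(pixels: bytes) -> str:
    """Hash an image buffer into a short token, as a tracker would."""
    return hashlib.sha256(pixels).hexdigest()[:16]


# Two machines whose real renderings differ slightly (made-up pixel data).
alice_pixels = bytes([10, 20, 30, 40])
bob_pixels = bytes([10, 20, 31, 40])

trusted = {"maps.example"}  # hypothetical site the user chose to allow

alice_seen_by_tracker = fingerprint(read_canvas(alice_pixels, "tracker.example", trusted))
bob_seen_by_tracker = fingerprint(read_canvas(bob_pixels, "tracker.example", trusted))
alice_seen_by_trusted = fingerprint(read_canvas(alice_pixels, "maps.example", trusted))
```

Because every unpermitted read returns the same blank buffer, the tracker computes the same hash for every user, and the hash stops working as an identifier.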

With respect to other browser-based attacks, researchers observed, “The Tor Browser Bundle (TBB) prevents cross-site cookie tracking by disabling all third-party cookies, and not storing any persistent data such as cookies, cache or localStorage.”

Finally, with respect to evercookies, “The straightforward way to defend against evercookies is to clear all possible storage locations. The long list of items removed by the Tor Browser when a user switches to a new identity provides a hint of what can be stored in unexpected corners of the browser: ‘searchbox and findbox text, HTTP auth, SSL state, OCSP state, site-specific content preferences (including HSTS state), content and image cache, offline cache, Cookies, DOM storage, DOM local storage, the safe browsing key, and the Google wifi geolocation token[...]’.”

When I first learned I had missed that the widely-publicized July 21, 2014 canvas fingerprinting attack research included significant positive findings about Tor browser as a defense, I realized I had read the press, but not the underlying full research paper. I read dozens of research papers, but can’t read every one that comes out. I immediately went to FreeHaven, the definitive anonymity bibliography, for clues as to how I missed that this was a “Tor” paper. And I got my answer. The actual research paper was - Not.There. FreeHaven seems only to include papers after they’re “officially” “published.” In this case, that won’t be until sometime in November, 2014.

Further sleuthing uncovered that most of the press coverage likewise failed to even mention the key defense the Persistent paper mentions over and over again: Tor browser. Of the eight press articles on the researchers’ website, only two mention Tor: ProPublica and the BBC. In none of the other articles does the word “Tor” appear, not even in the article by EFF, a previous funder of Tor.

Articles about Tor appear in the press every day. I read some accurate, informative and interesting articles. I read all sorts of nonsense about Tor in the press, too. I wish the press had done an article about how, of all the dozens of browsers and tools available in the world today, Tor browser defends against these kinds of attacks. If I as an infosec researcher don’t hear about it, how can I expect the press to get the story? The good people at Tor are so busy creating really effective software and online services, maybe they don’t always have time to help the press get the full story.

Clark, Erinn, Murdoch, Steven, and Perry, Mike, The Design and Implementation of the Tor Browser [DRAFT] March 15, 2013 https://www.torproject.org/projects/torbrowser/design

Mowery, Keaton and Shacham, Hovav, Pixel Perfect: Fingerprinting Canvas in HTML5, In Proceedings of W2SP 2012. IEEE Computer Society, May 2012. http://w2spconf.com/2012/papers/w2sp12-final4.pdf
